What is AI Mind?

AI Mind is a user-friendly web application that lets you paste an image URL into its interface. The app sends the image to a machine learning model, which recognizes the objects in it that belong to categories it learned during training and labels them.

The app then presents these predictions as a word cloud, in which the labels the model is most confident about appear in a bigger font and a more prominent position.

Showcase Video!

How can I use it?

You can visit AI Mind right now! (Note: it might take up to a minute to register or log in if the app hasn't been used for a while 🙂.)

Why Build AI Mind?

I like building projects that just come to my mind. After learning about APIs in my Udemy course, I thought it would be super cool to "see" what an AI's mind might look like if I showed it an image.

How does AI Mind work?

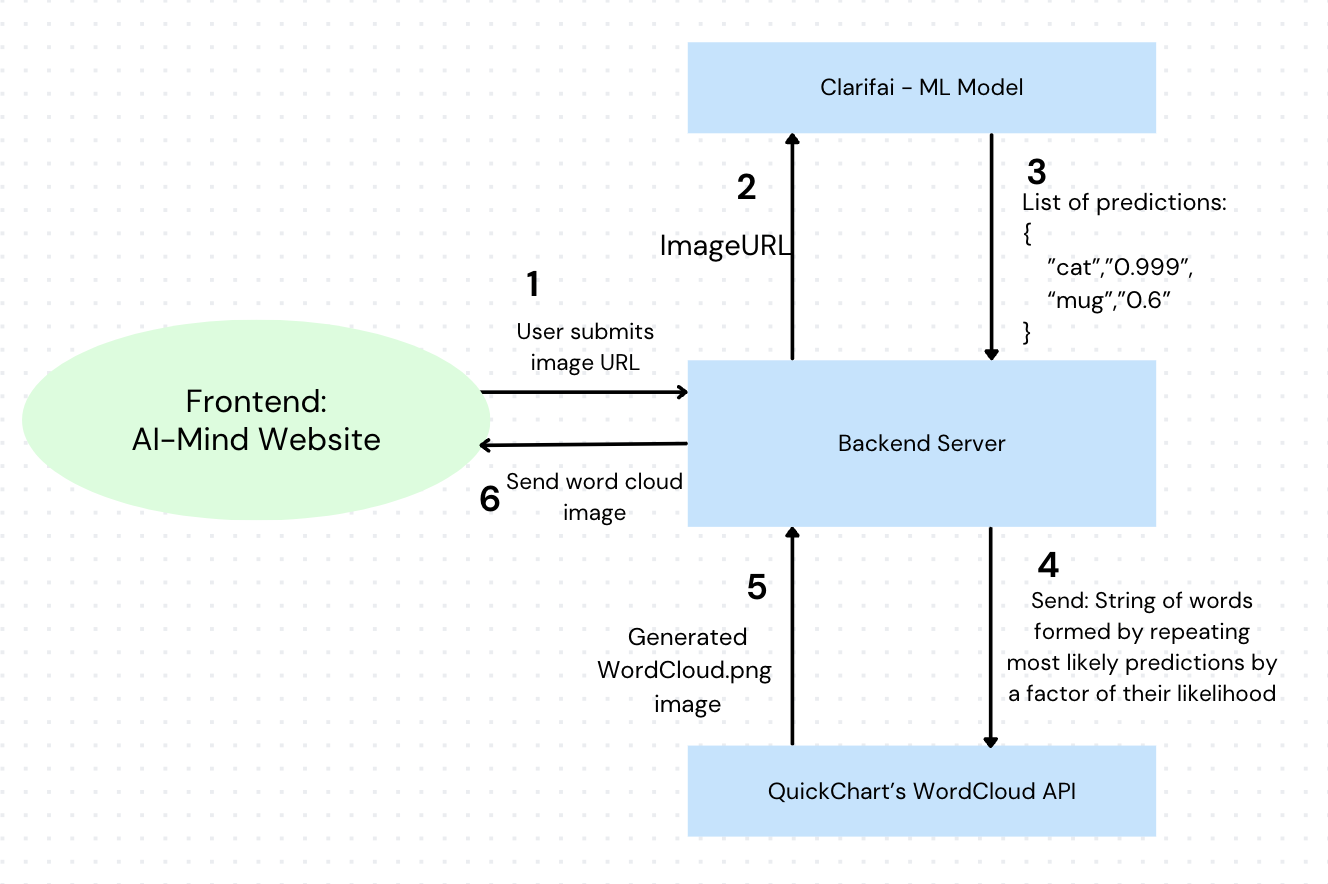

The user enters an image URL in the input box and clicks the "Submit" button.

The image URL is sent to Clarifai's API, where the image is fetched and processed by a hosted machine learning model. The model recognizes objects from the categories it saw in its training data: there are 1000 different classes it can label (cat, dog, coffee, purse, etc.).
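As a rough illustration of this step, here is a minimal sketch of how such a request might be assembled, assuming the shape of Clarifai's v2 REST endpoint (`POST /v2/models/{model_id}/outputs` with a `Key` authorization header). The helper name `buildClarifaiRequest` and the `pat`, `userId`, `appId`, and `modelId` values are hypothetical placeholders, not AI Mind's actual code or credentials.

```javascript
// Hypothetical helper: assembles a Clarifai v2 "predict by URL" request.
// Assumes the v2 REST shape; pat/userId/appId/modelId are placeholders.
function buildClarifaiRequest(imageUrl, { pat, userId, appId, modelId }) {
  const body = {
    // Identifies which Clarifai user/app owns the model being called.
    user_app_id: { user_id: userId, app_id: appId },
    // One input: the image is referenced by URL, and Clarifai fetches it.
    inputs: [{ data: { image: { url: imageUrl } } }],
  };

  return {
    url: `https://api.clarifai.com/v2/models/${modelId}/outputs`,
    options: {
      method: "POST",
      headers: {
        Accept: "application/json",
        // Personal access token, sent as "Key <token>".
        Authorization: `Key ${pat}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(body),
    },
  };
}
```

On Node.js 18 or newer, the result could be sent with the built-in `fetch`, e.g. `const { url, options } = buildClarifaiRequest(imageUrl, config); const res = await fetch(url, options);`. Making this call from a backend such as the Express server keeps the access token out of the browser.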

The model returns a list of all the class labels it thinks are present in the image, along with a confidence score for each one.
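A minimal sketch of reading that list, assuming the v2 response shape in which predictions live under `outputs[0].data.concepts` and each concept carries a `name` and a `value` between 0 and 1. The function name `extractPredictions` and the `{ label, confidence }` output shape are illustrative choices, not taken from AI Mind.

```javascript
// Hypothetical helper: turns a Clarifai-style prediction response into
// [{ label, confidence }] pairs, most confident first.
// Assumes concepts live at outputs[0].data.concepts with `name` and `value`.
function extractPredictions(response) {
  // Fall back to an empty list if the response lacks the expected fields.
  const concepts = response?.outputs?.[0]?.data?.concepts ?? [];
  return concepts
    .map(({ name, value }) => ({ label: name, confidence: value }))
    .sort((a, b) => b.confidence - a.confidence);
}
```

Sorting by confidence is not required for the word cloud, but it makes the list easier to inspect or show alongside the image.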

QuickChart's word cloud API generates a word cloud image from a single string of text, sizing each word by how often it appears. One way to form our word cloud is therefore to repeat each label in the predictions list a number of times proportional to its confidence score. This way, the more confident predictions occur more frequently in the string and are given more prominence (a bigger font and a spot closer to the center) in the word cloud.
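The weighting idea can be sketched as follows, assuming predictions arrive as `{ label, confidence }` pairs and that QuickChart's word cloud endpoint accepts the text as a `text` query parameter on `https://quickchart.io/wordcloud`. The function names, the `repeatsAtFullConfidence` knob, and the "show every label at least once" rule are hypothetical choices for illustration, not AI Mind's actual values.

```javascript
// Hypothetical helper: repeats each label roughly in proportion to its
// confidence so a frequency-based word cloud draws confident labels larger.
// `repeatsAtFullConfidence` is an assumed tuning knob.
function buildWordcloudText(predictions, repeatsAtFullConfidence = 20) {
  const words = [];
  for (const { label, confidence } of predictions) {
    // Every label appears at least once, so low-confidence labels still show up (small).
    const repeats = Math.max(1, Math.round(confidence * repeatsAtFullConfidence));
    for (let i = 0; i < repeats; i++) {
      words.push(label);
    }
  }
  return words.join(" ");
}

// Builds a GET URL for QuickChart's word cloud endpoint;
// URLSearchParams takes care of encoding the repeated text.
function buildWordcloudUrl(text) {
  const params = new URLSearchParams({ text });
  return `https://quickchart.io/wordcloud?${params.toString()}`;
}
```

For example, `buildWordcloudText([{ label: "cat", confidence: 0.9 }, { label: "pillow", confidence: 0.1 }], 10)` produces a string with "cat" nine times and "pillow" once, so "cat" dominates the resulting cloud. A larger multiplier sharpens the size differences but also lengthens the URL.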

What makes AI Mind different?

I don't think I've ever seen anything quite like this. Advanced machine learning models already power many everyday features, such as image search, image tagging, and automatic caption generation. I thought it would be really cool to see a small part of how that happens.

How was it built?

AI Mind is built using HTML, CSS, and ReactJS (JavaScript) for the frontend. The backend is built using Node.js and Express.js.

The machine learning model is Facebook (Meta)'s DeiT base image recognition model (trained on over a million ImageNet images and able to classify 1000 classes), which is hosted on Clarifai. The word cloud is generated using QuickChart's word cloud API.

The Flowchart!

How can it be better?

The input is currently limited to image URLs. I think it would be cool to have an option to upload an image directly from your computer. I would also like to add features such as saving the word cloud image and maybe sharing it with others on social media.

How was my experience?

I had a lot of fun building this project. I learned a lot about APIs: how to use them and how to integrate them into my projects. I also learned how I can use machine learning models as a web developer, and how they can improve our experiences with technology.